@simon_brooke

I ran @TheBreadmonkey's holiday picture through a Google image search, and it returned the right location and the possible hotel it was taken from.

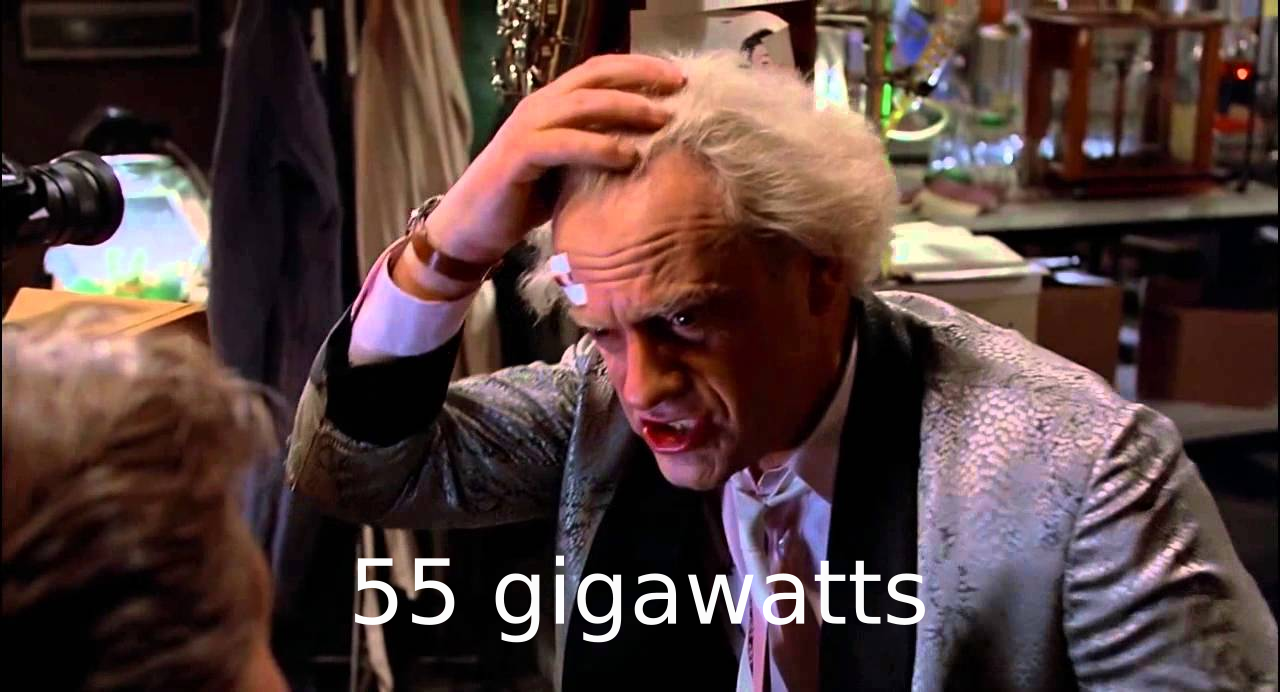

Total AI compute across the top ten nations in 2025 is estimated at approximately 79 million NVIDIA H100 GPU equivalents, with the U.S. holding the largest share. Running this capacity is estimated to require around 55 gigawatts (GW).

https://www.mescomputing.com/news/ai/the-top-10-most-ai-dominant-countries-in-2025-report
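As a quick sanity check on those two estimates, dividing the total power by the GPU count gives the average power budget per H100 equivalent. This is a minimal sketch that uses only the two figures quoted above; it says nothing about how the 55 GW splits between the GPUs themselves and cooling or other overhead.

```python
# Figures taken from the 2025 estimate quoted above (top ten nations).
H100_EQUIVALENTS = 79e6  # ~79 million H100 GPU equivalents
TOTAL_POWER_W = 55e9     # ~55 GW, expressed in watts


def watts_per_gpu(total_power_w: float, gpu_count: float) -> float:
    """Average power budget per GPU implied by a total power estimate."""
    return total_power_w / gpu_count


per_gpu = watts_per_gpu(TOTAL_POWER_W, H100_EQUIVALENTS)
print(f"{per_gpu:.0f} W per H100 equivalent")  # prints "696 W per H100 equivalent"
```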

New York City: Averages 5,500 MW

GW = 1e9 W

MW = 1e6 W

55 GW / 5,500 MW = 55e9 W / 5.5e9 W = 10 New York Cities
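The same unit conversion, written out so the watts cancel explicitly. This is just a sketch of the arithmetic above; the 5,500 MW figure is the NYC average quoted in the thread, not a peak.

```python
GW = 1e9  # watts per gigawatt
MW = 1e6  # watts per megawatt

ai_compute_power_w = 55 * GW      # estimated AI compute power draw
nyc_average_power_w = 5_500 * MW  # New York City average demand

# Both values are in watts, so the ratio is dimensionless.
nyc_equivalents = ai_compute_power_w / nyc_average_power_w
print(f"{nyc_equivalents:g} New York Cities")  # prints "10 New York Cities"
```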

The NVIDIA H100 GPU offers immense compute power, reaching up to ~1 PetaFLOPS.

FLOPS = Floating Point Operations Per Second

It is a meaningful way to measure performance in CPU-intensive workloads, like computational fluid dynamics. It's still used in supercomputing benchmarks.

It's not a meaningful measure of personal computing performance because most operations in your PC are not complex calculations. Much of the PC's work is moving data around or operating system activities that are short on complex matrix math.

https://www.reddit.com/r/explainlikeimfive/comments/5j820e/eli5_what_is_flops_and_is_it_a_meaningful_way_to/
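To make "FLOPS is a rate" concrete: count the floating-point operations a job needs, then divide by the rate. The sketch below uses a square matrix multiply (the kind of "matrix math" mentioned above) and the usual 2·m·n·k convention, counting one multiply and one add per term (the exact count is m·n·(2k − 1)). The 8192 size is an arbitrary choice for illustration, and the timing assumes the ~1 PFLOPS peak is actually sustained, which real workloads rarely achieve, so treat the result as a best case.

```python
PETA = 1e15  # 10^15


def matmul_flops(m: int, n: int, k: int) -> int:
    """FLOPs for an (m x k) @ (k x n) multiply, using the 2*m*n*k convention."""
    return 2 * m * n * k


def seconds_at_rate(flop_count: float, flops_per_second: float) -> float:
    """Ideal runtime if the given rate were sustained the whole time."""
    return flop_count / flops_per_second


ops = matmul_flops(8192, 8192, 8192)
print(ops)  # prints 1099511627776 (about 1.1e12 operations)
print(f"{seconds_at_rate(ops, 1 * PETA) * 1e3:.2f} ms at 1 PFLOPS")  # prints "1.10 ms at 1 PFLOPS"
```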

peta = 1e15

1e15 FLOPS per GPU × 8e7 GPUs (79 million, rounded up) = 8e22 Floating Point Operations Per Second
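The aggregate figure as a short calculation, showing both the unrounded 79 million count and the rounded 8e7 used above. This assumes every GPU runs at the ~1 PFLOPS peak simultaneously, so it is an upper bound rather than an achieved throughput.

```python
PETA = 1e15  # peta = 10^15

gpu_count = 79e6               # ~79 million H100 equivalents (estimate quoted above)
peak_flops_per_gpu = 1 * PETA  # ~1 PFLOPS per H100, a peak figure

aggregate_flops = gpu_count * peak_flops_per_gpu
rounded_flops = 8e7 * peak_flops_per_gpu  # the rounded 8e7 GPU count

print(f"{aggregate_flops:.1e} FLOPS (unrounded)")  # prints "7.9e+22 FLOPS (unrounded)"
print(f"{rounded_flops:.0e} FLOPS (rounded)")      # prints "8e+22 FLOPS (rounded)"
```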

@glasspusher