Have you ever wondered why your computer has a fan, and why that fan usually stays quiet until the machine actually starts doing something?

When your laptop sits idle, very little is happening electrically. Modern processors are extremely aggressive about not working unless they have to. Large parts of the chip are clock-gated or power-gated entirely. No clock edges means no switching. No switching means almost no dynamic power use. At idle, a modern CPU is mostly just maintaining state, sipping energy to keep memory alive and respond to interrupts.

The moment real work starts, that changes.

Every clock tick forces millions or billions of transistors to switch, charge and discharge tiny capacitors, and move electrons through resistive paths. That switching energy turns directly into heat. More clock cycles per second means more switching. More switching means more heat. Clock equals work, and work equals heat.

This is why performance and temperature rise together. When you compile code, render video, or train a model, the clock ramps up, voltage often increases, and the chip suddenly dissipates tens or hundreds of watts instead of one or two watts.
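The usual first-order model for switching power ties these together. This is a standard textbook approximation rather than something from the linked thread. Here α is the fraction of the circuit that switches per cycle, C is the switched capacitance, V is the supply voltage and f is the clock frequency. The 1.5× clock and 1.2× voltage boost in the second line are hypothetical numbers chosen only to illustrate the scaling:

$$
P_{\text{dyn}} \approx \alpha \, C \, V^2 f
$$

$$
\frac{P_{\text{boost}}}{P_{\text{base}}} = \frac{f_{\text{boost}}}{f_{\text{base}}} \left(\frac{V_{\text{boost}}}{V_{\text{base}}}\right)^2 = 1.5 \times 1.2^2 = 2.16
$$

Under these assumptions, a 50 % higher clock costs more than twice the power once the voltage has to rise with it.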

The fan turns on not because the computer is panicking, but because physics is being obeyed.

Even when transistors are not switching, hot silicon still consumes power. As temperature rises, leakage current rises roughly exponentially: electrons slip through transistors that are supposed to be off. This leakage does no useful work. It simply generates more heat, which raises the temperature further, which increases leakage again. This feedback loop is one of the reasons temperature limits exist at all, and ultimately why we have fans: to keep a system under load below the temperature where this loop starts to run away.
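A toy fixed-point model makes the feedback loop concrete. Everything in it is an assumption for illustration. It uses a constant dynamic power and a leakage power that doubles every 20 °C. It also models cooling as a single die-to-ambient thermal resistance R_th. The function name `steady_state_temp` and all parameter values are hypothetical. Real leakage curves are process-specific, and real chips throttle their clocks long before anything like this happens.

```python
def steady_state_temp(p_dynamic_w, p_leak_ref_w, r_thermal_c_per_w,
                      t_ambient_c=25.0, leak_doubling_c=20.0,
                      runaway_c=200.0, max_iter=1000, tol_c=1e-6):
    """Solve T = T_amb + R_th * (P_dyn + P_leak(T)) by fixed-point iteration.

    Hypothetical toy model: leakage is p_leak_ref_w at ambient and doubles
    every leak_doubling_c degrees. Returns the steady-state die temperature
    in deg C, or None if it climbs past runaway_c (treated as runaway).
    """
    t = t_ambient_c
    for _ in range(max_iter):
        # Leakage grows exponentially with temperature (assumed doubling law).
        p_leak = p_leak_ref_w * 2 ** ((t - t_ambient_c) / leak_doubling_c)
        # Temperature rise = total power times die-to-ambient thermal resistance.
        t_next = t_ambient_c + r_thermal_c_per_w * (p_dynamic_w + p_leak)
        if t_next > runaway_c:
            return None  # temperature keeps climbing: treat as thermal runaway
        if abs(t_next - t) < tol_c:
            return t_next  # settled at a stable operating point
        t = t_next
    return None  # did not settle within max_iter: treat as unstable


# Same 50 W workload and 5 W leakage at 25 °C, two hypothetical coolers.
print(steady_state_temp(50, 5, 0.5))  # good cooling: settles near 57.8 °C
print(steady_state_temp(50, 5, 1.5))  # poor cooling: None (runaway)
```

The only difference between the two runs is how well heat is carried away. With good cooling, the extra leakage adds a few degrees and stabilises. With poor cooling, each increment of heat buys more leakage than the cooler can remove.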

Above roughly 100 °C, this leakage becomes a serious design concern for modern chips. This is not because silicon melts (that happens at about 1414 °C) or stops working, but because efficiency collapses.

You spend more and more energy just keeping the circuit alive, not computing. To compensate, designers must lower clock speeds, increase timing margins, or raise voltage, all of which reduce performance per watt.

Reliability also suffers. High temperature accelerates wear mechanisms inside the chip. Metal atoms in interconnects slowly migrate. Insulating layers degrade. Transistors age faster. A chip running hot all the time will not live as long as one kept cooler, even if it technically functions.
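For metal migration in interconnects (electromigration), the classic empirical model is Black's equation. In it, J is the current density, A and n are fitted constants, E_a is an activation energy, k is Boltzmann's constant and T is the absolute temperature. The 0.7 eV activation energy in the worked ratio is an assumed illustrative value, since real values depend on the metallization:

$$
\text{MTTF} = A \, J^{-n} \exp\!\left(\frac{E_a}{k T}\right)
$$

$$
\frac{\text{MTTF}(70\,^\circ\text{C})}{\text{MTTF}(100\,^\circ\text{C})}
= \exp\!\left[\frac{E_a}{k}\left(\frac{1}{343.15\,\text{K}} - \frac{1}{373.15\,\text{K}}\right)\right]
\approx \exp\!\left[\frac{0.7\,\text{eV}}{8.617\times10^{-5}\,\text{eV/K}} \times 2.343\times10^{-4}\,\text{K}^{-1}\right]
\approx 6.7
$$

At the same current density and under these assumptions, that is roughly a 6.7× shorter interconnect lifetime for a chip held at 100 °C instead of 70 °C.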

This is why cooling exists, and why it scales with workload. It keeps the chip in a temperature range where switching dominates over leakage, where clocks can run fast without excessive voltage, and where the hardware will still be alive years from now.

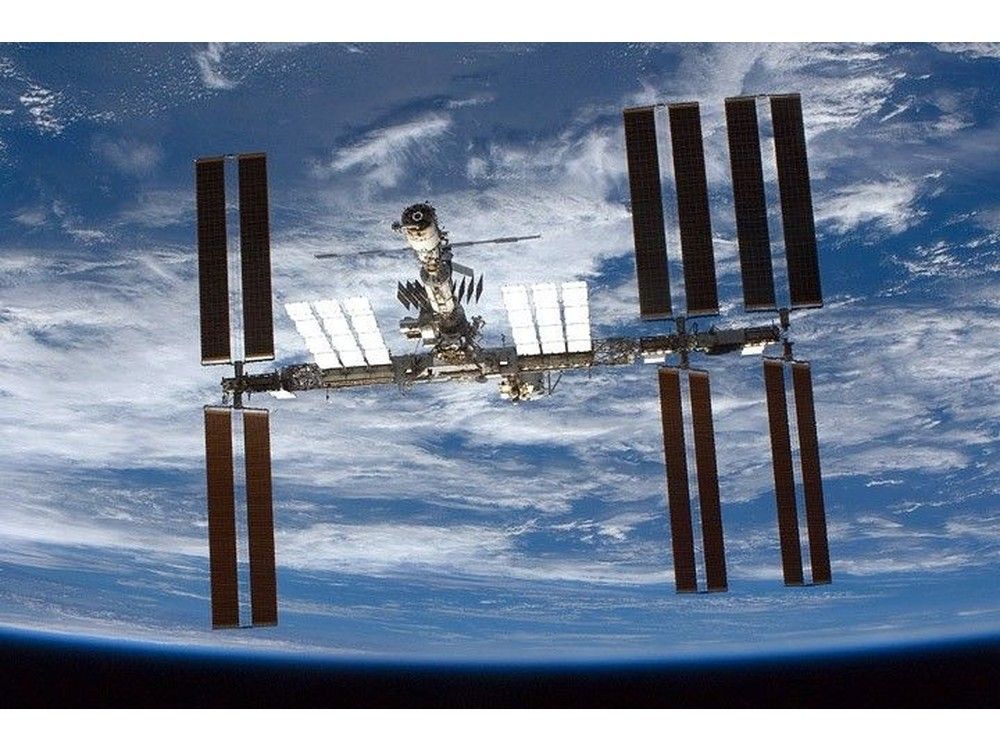

In space, where you cannot rely on air or liquid to carry heat away, this tradeoff becomes unavoidable and very visible.

- Run hotter, and you can radiate heat more easily.

- Run hotter, and your electronics become slower, leakier, and shorter-lived.
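The first half of that tradeoff comes from the Stefan-Boltzmann law: radiated power grows with the fourth power of absolute temperature. The sketch below estimates how much radiator area is needed to reject a given load. Its assumptions are idealised and stated here as such: a single radiating face, uniform temperature, a 3 K deep-space sink, an emissivity of 0.9, and no absorbed sunlight or Earth infrared. The function name `radiator_area_m2` is hypothetical.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W / (m^2 K^4)


def radiator_area_m2(power_w, temp_c, emissivity=0.9, sink_temp_k=3.0):
    """Radiating area needed to reject power_w from a surface at temp_c.

    Idealised sketch: one radiating face, uniform temperature, deep-space
    sink, no absorbed sunlight or Earth infrared. Emissivity is assumed.
    """
    t_k = temp_c + 273.15
    # Net radiated flux per square metre (Stefan-Boltzmann law).
    flux_w_per_m2 = emissivity * SIGMA * (t_k ** 4 - sink_temp_k ** 4)
    return power_w / flux_w_per_m2


# Rejecting 1 kW with the radiator held at two different temperatures.
for temp_c in (30, 80):
    print(f"{temp_c} °C: {radiator_area_m2(1000, temp_c):.2f} m^2")
```

Under these assumptions, 1 kW needs about 2.3 m² of radiator at 30 °C but only about 1.3 m² at 80 °C. That is the pull toward running hot. The chips themselves must also sit hotter than the radiator they dump heat into, which pushes them further up the leakage and ageing curves.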

RE: https://infosec.exchange/@isotopp/116018308301580721

Insightful thread on running electronics in space. Spoiler alert: GPUs do not work up there. Dear journalists, please read this 7 times before relaying information about datacentres in orbit.

#space